Integration of Large Language Models (LLMs) 2025: A Comprehensive Guide for Developers

Comprehensive guide to integrating OpenAI API and Anthropic Claude, effective prompt engineering, and cost-optimized LLM usage for developers.

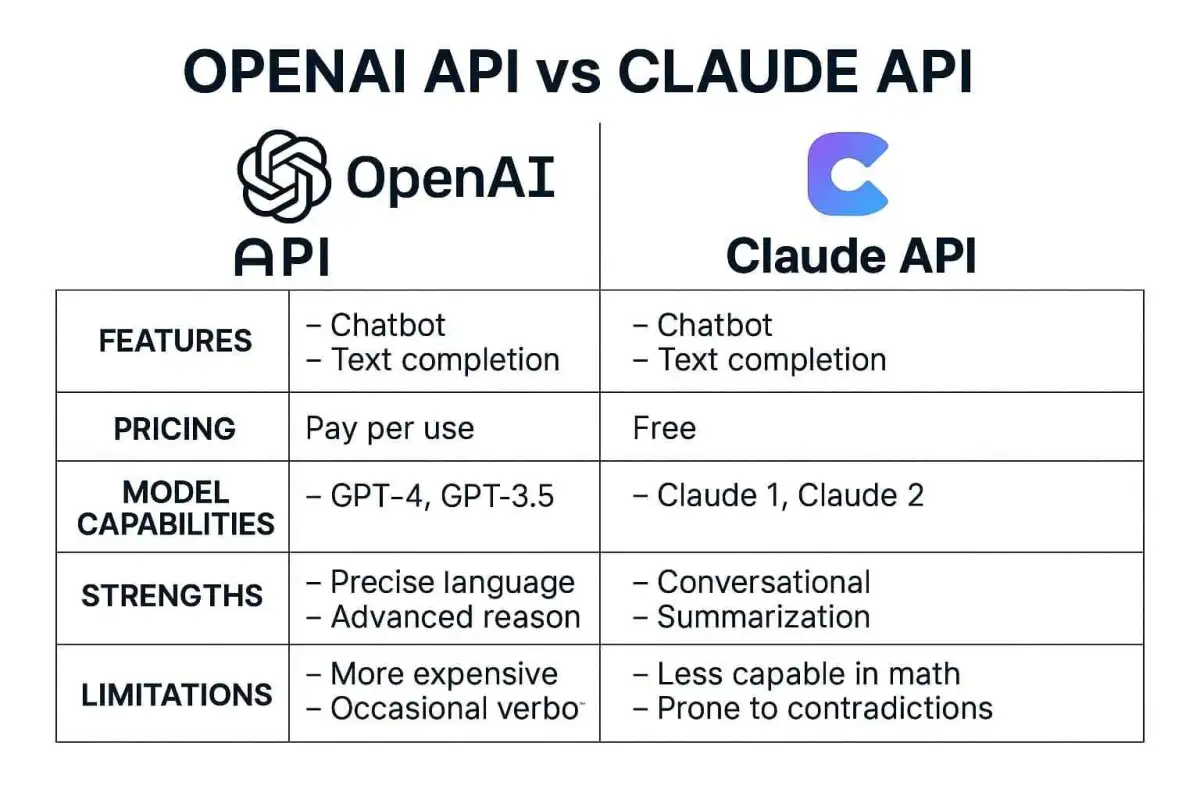

Large Language Models (LLMs) are revolutionizing software development and enabling innovative applications with human-like language capabilities. This comprehensive guide shows developers how to effectively integrate OpenAI and Anthropic Claude APIs, master advanced prompt engineering, and optimize costs.

OpenAI API Integration: From Basics to Implementation

What is the OpenAI API?

The OpenAI API provides developers with access to powerful language models like GPT-4.5 and o1, enabling text understanding, generation, and multimodal capabilities. As a gateway to the most advanced AI models, the API allows integration of these capabilities into your own applications through simple HTTP requests.

New Features in 2025

Responses API with Remote MCP Server Support: In 2025, OpenAI expanded the Responses API with support for Remote Model Context Protocol (MCP) servers, significantly improving integration with external services. This feature enables agent-based applications with enhanced context processing.

Image and Code Generation: The latest updates offer advanced features for image generation and Code Interpreter directly through the API, simplifying the development of multifunctional applications.

Background Mode: For computationally intensive tasks, OpenAI introduced an asynchronous background mode that handles long operations more reliably and provides better control over complex workflows.

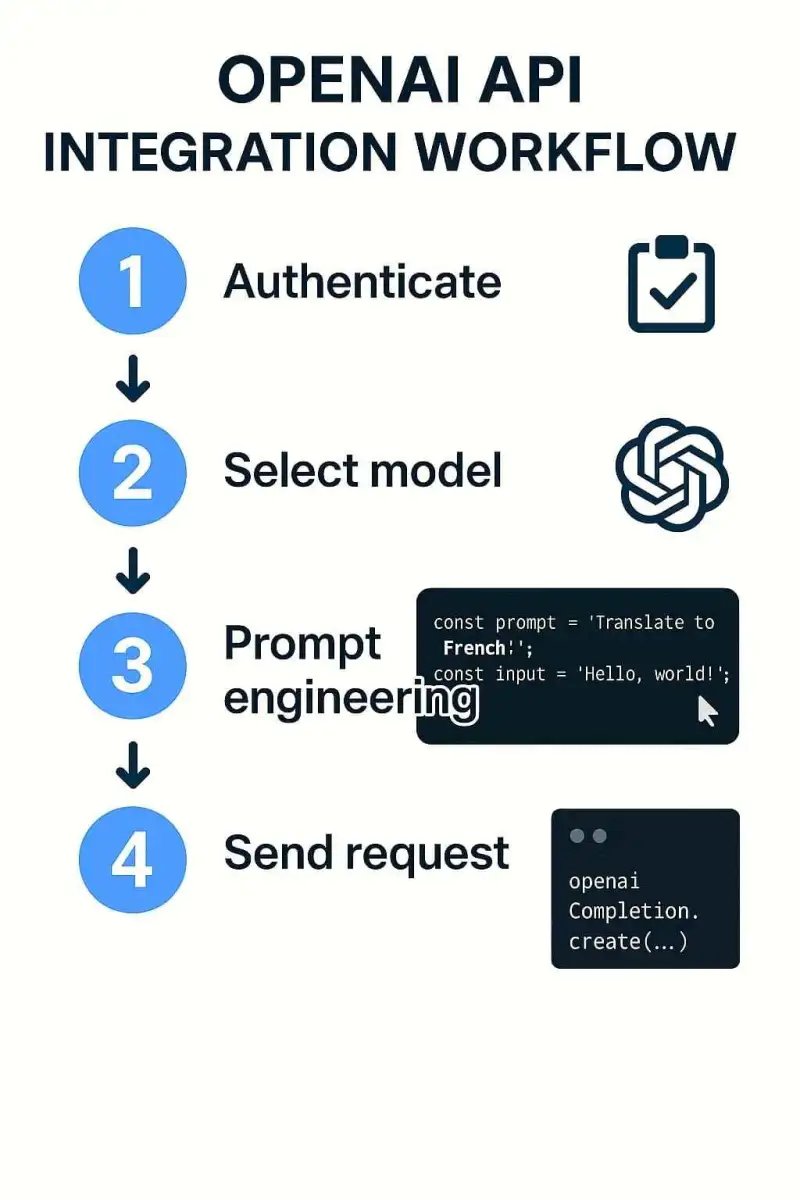

Getting Started with the OpenAI API

Prerequisites

- OpenAI API account and API key

- Basic programming knowledge (Python, JavaScript, etc.)

- Understanding of REST APIs and JSON

# OpenAI API basic integration

import os

from openai import OpenAI

# Initialize client

client = OpenAI(

api_key=os.environ.get("OPENAI_API_KEY")

)

# Send request to the model

response = client.chat.completions.create(

model="gpt-4.5",

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Explain the benefits of APIs in simple terms."}

]

)

# Output response

print(response.choices[0].message.content)Anthropic Claude API: The Innovative Alternative

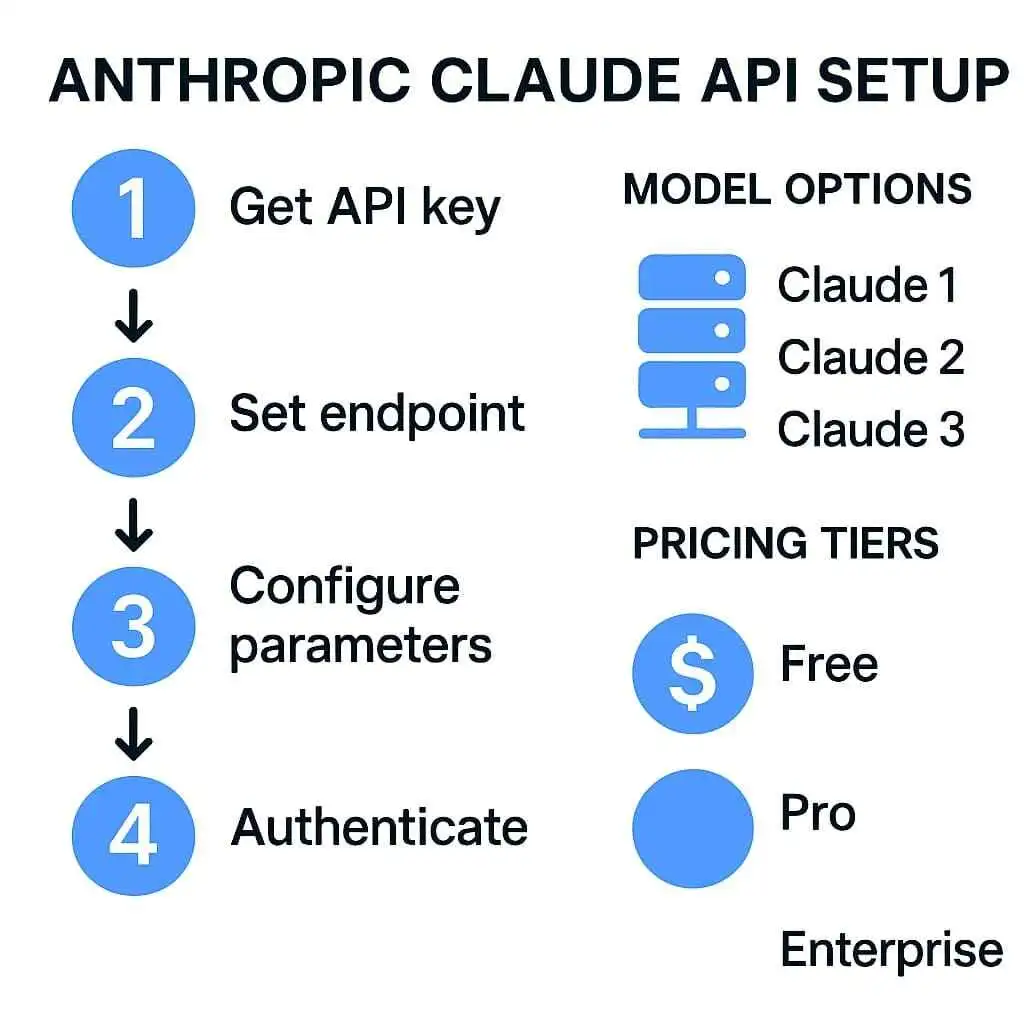

What is the Claude API?

The Anthropic Claude API provides access to the Claude language models, known for their safety alignment, natural understanding, and nuanced text generation. Claude particularly excels at longer conversations, better context processing, and the ability to handle complex tasks.

Claude Models Overview

- Claude 4 Opus: The most powerful model with superior reasoning capabilities, ideal for complex tasks

- Claude 4 Sonnet: Balanced model with a good balance between performance and speed for general enterprise applications

- Claude 3.5 Haiku: Fastest and most cost-effective model for applications with high throughput requirements

# Claude API basic integration

import os

import anthropic

# Initialize client

client = anthropic.Anthropic(

api_key=os.environ.get("ANTHROPIC_API_KEY")

)

# Send request to Claude

message = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=1024,

messages=[

{"role": "user", "content": "Explain the benefits of AI integrations for developers."}

]

)

# Output response

print(message.content[0].text)Prompt Engineering for Developers

Fundamentals of Prompt Engineering

Prompt engineering is the art and science of creating effective instructions for LLMs to obtain precise and relevant answers. It is a critical factor for successful LLM integrations and directly influences the quality of results and cost efficiency.

Zero-Shot vs. Few-Shot Prompting

Zero-Shot Prompting is the simplest form of prompting, where the model is given an instruction or question without any examples. This works well for simple, general tasks but requires precise wording.

Few-Shot Prompting provides the model with one or more examples of what the desired output should look like. By providing examples, you increase the likelihood that the LLM will solve the task correctly and in the desired format.

Best Practices for Effective Prompt Engineering

1. Clear Context: Provide sufficient background information for the model to understand the request correctly.

2. Specific Instructions: Formulate precisely what you expect from the model, including format, tone, and scope.

3. Break Down Tasks: Split complex tasks into smaller, more manageable steps.

4. Iterative Refinement: Start with simple prompts and refine them based on the results.

5. Consistency in Formatting: Use the same formatting and structure consistently in your prompts.

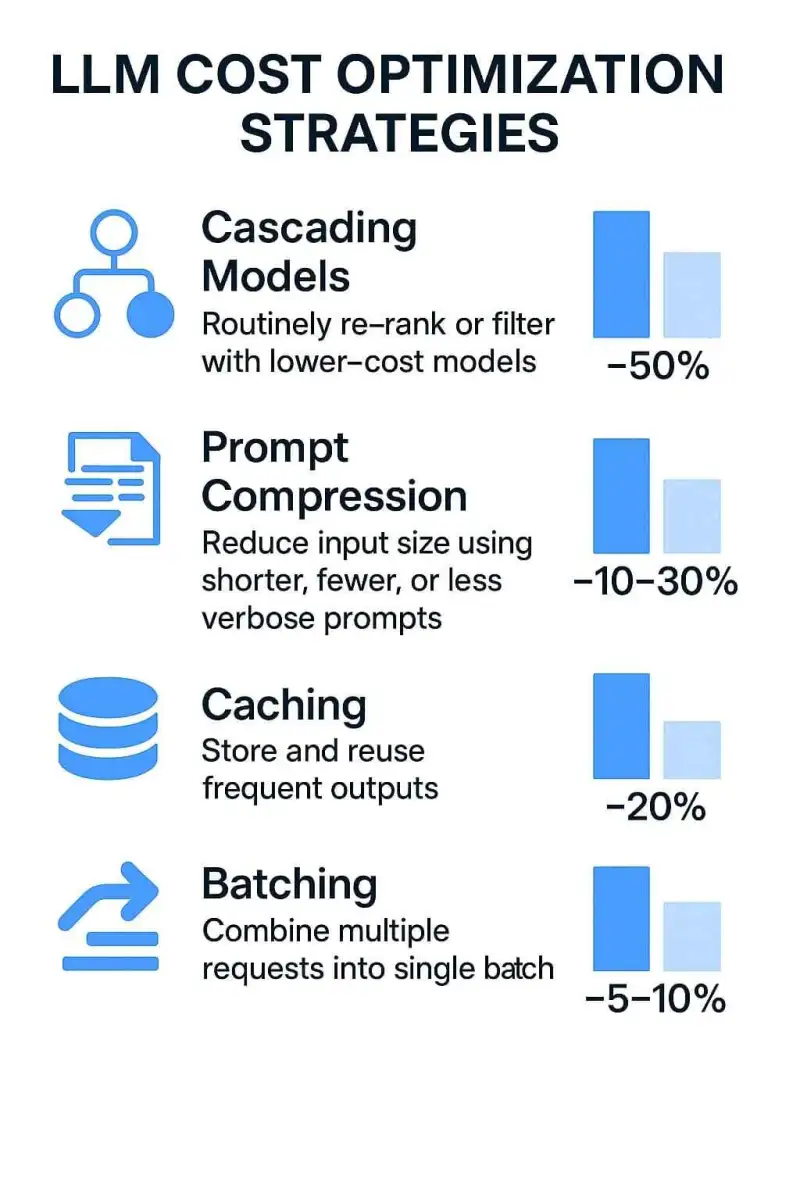

Cost-Optimized LLM Usage

> Challenges of LLM Cost Optimization

The costs of LLM API usage can quickly escalate, especially with high request volumes or complex applications. Main cost factors include:

- Token-based billing (input and output)

- Model selection (larger models = higher costs)

- Context window size (longer contexts consume more tokens)

- Request volume and frequency

Strategy 1: LLM Cascading

LLM Cascading is an effective strategy where queries are processed through a sequence of models, starting with the cheapest and escalating to more powerful models only when needed. With this strategy, cost savings of up to 87% can be achieved, with most requests staying with cheaper models.

Strategy 2: Prompt Optimization and Token Minimization

Since LLM APIs charge per token, reducing tokens directly leads to cost savings. Techniques include:

- Prompt Compression: Removing redundant information

- Precise Instructions: Clear, direct instructions instead of verbose explanations

- Information Prioritization: Important information first to avoid repetition

Strategy 3: Caching and RAG

Semantic Caching stores responses to frequent queries in a cache, allowing immediate retrieval without re-querying the LLM.

Retrieval-Augmented Generation (RAG) supplements LLMs with external data sources, reducing the need for large context windows and potentially reducing token consumption by up to 30%.

Summary and Best Practices

Successful integration of LLMs requires a strategic approach that balances performance, cost, and user-friendliness. Here are the key insights:

- API Selection: Choose the API based on your specific requirements

- Model Strategy: Implement a cascading strategy for optimal cost efficiency

- Prompt Engineering: Invest time in optimizing your prompts

- Cost Management: Set budget limits and implement caching strategies

- Continuous Improvement: Analyze performance and adapt your strategy